Although the articles were incredible and clearly explained the technologies, they also clearly demonstrated how complex ‘legacy’ MPLS technologies are. UPDATE: I recently found out about PacketDesign and got very excited by the material they have put out. Their white paper on MPLS-TE is one of the best pieces I’ve seen on the subject! I urge you to check it out.

This article is divided into 4 sections: First, I mention reasons for MPLS forwarding. Second, I go through some of the motivations behind Traffic Engineering technologies. Then, I briefly explain Segment Routing, and I conclude with a tutorial on how ONOS can achieve TE using an SR SDN application on top of OpenFlow.

Why MPLS at all?

To reduce network state.

Why Traffic Engineering?

To save money!! $$$$

Diptanshu Singh explains this subject wonderfully, so I urge you to check his article if you need a more detailed explanation.

For instance, say the Comcast network in your neighborhood has 1 Gbps of VOIP and 4 Gbps of data traffic demand. It’s provisioned at 2x demand (50% utilization), so its 10G links suffice at the moment. Now suppose its traffic increases 20% next year: demand becomes 6 Gbps, which needs 12 Gbps at the same rate, so sustaining this strategy would require an immediate upgrade of the infrastructure.

A Diffserv strategy would change resource allocation rates: one could instead allocate a 2x overprovision rate for VOIP and a 1.2x overprovision rate for data. After the 20% increase, that results in 2.4 + 5.76 ≈ 8.2 Gbps of required bandwidth (for voice: 1G * 120% * 2, that is, voice demand plus the 20% increase, times the 2x overprovision rate). The year after, you would need 2.88 + 6.91 ≈ 9.8 Gbps, still smaller than 10G. With this approach, Comcast can delay its backbone upgrade for 2 years and still adhere to the SLAs required for sensitive traffic.
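The arithmetic above can be checked with a few lines of Python. This is only a sketch of the example in this article: the function names are mine, and the inputs (1 Gbps voice, 4 Gbps data, 20% yearly growth, 10G links) are the illustrative numbers used here, not real Comcast figures. It prints unrounded values.

```python
def required_capacity(voice_gbps, data_gbps, voice_factor, data_factor, growth, years):
    """Capacity (Gbps) needed after `years` of compound traffic growth."""
    scale = (1.0 + growth) ** years
    return scale * (voice_gbps * voice_factor + data_gbps * data_factor)


def years_within_link(voice_factor, data_factor, link_gbps=10.0,
                      voice_gbps=1.0, data_gbps=4.0, growth=0.20, max_years=50):
    """Number of future years the link still covers the required capacity."""
    years = 0
    while years < max_years and required_capacity(
            voice_gbps, data_gbps, voice_factor, data_factor,
            growth, years + 1) <= link_gbps:
        years += 1
    return years


if __name__ == "__main__":
    for year in range(1, 4):
        # Flat rule: 2x overprovisioning for everything.
        flat = required_capacity(1.0, 4.0, 2.0, 2.0, 0.20, year)
        # Diffserv rule: 2x for voice, 1.2x for data.
        diffserv = required_capacity(1.0, 4.0, 2.0, 1.2, 0.20, year)
        print(f"year {year}: flat={flat:.2f} Gbps, diffserv={diffserv:.2f} Gbps")
    print("years before upgrade (flat):", years_within_link(2.0, 2.0))
    print("years before upgrade (diffserv):", years_within_link(2.0, 1.2))
```

With these inputs the flat rule already exceeds 10 Gbps in year 1, while the Diffserv rule stays under 10 Gbps through year 2 and crosses it in year 3.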

With the first rule, your expansion rate is dictated by generic traffic growth because you must keep network utilization low. In the second case, your expansion rate is dictated by critical traffic growth and the networking equipment life cycle (at your convenience). Critical traffic is 5x smaller than total traffic in this example, thus your expansion rate would be roughly 5x lower if you don’t care about best-effort traffic.

Now you have the opportunity to reduce your expansion budget by a factor of 5 and invest that money in engineering power. I’m sure that’s what Google saw 10 years ago when it started heavily investing in its networking technology. Bad vendors will often say ‘you don’t need QoS or Traffic Engineering; the problem can be solved with more bandwidth’. That’s a convenient message if you sell bandwidth.

Why Segment Routing?

I wanted to compare legacy technologies (RSVP, LDP) with SR, but I realized that is pointless. To me, the only reason you would use legacy is for backward compatibility with existing equipment. Don’t get me wrong, RSVP will get the job done. Also, you may not be able to afford replacing it with SR, or maybe your RSVP infrastructure works perfectly and you already have proper processes in place.

All that said, SR is just simpler and better. To learn more about RSVP, check for yourself: http://packetpushers.net/rsvp-te-protocol-deep-dive/. If you know nothing about SR, check http://www.segment-routing.net/.

In summary, SR is a network architecture that allows the network to keep no per-flow state. Rather than being forwarded based only on the IP destination address, packets are forwarded based on the segment address (the segment identifier, or SID). The network maintains shortest-path forwarding state to each segment, plus backup paths to implement fast reroute. Fast reroute by itself is worth money: SR TI-LFA allows for sub-50 ms failure recovery.

Additionally, the architecture allows you to enforce loose source routing. For example, say the IGP (OSPF) shortest path gives you a 40 ms path. To steer your VoIP traffic through node 104 instead, you would just change your routing at the edge of the network to include that node's segment before the end destination.
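To make loose source routing concrete, here is a toy Python model, not how any real router or ONOS implements it. The topology, node IDs other than 104, and link costs are made up for illustration. Each segment in the list is reached via the IGP shortest path, so adding segment 104 in front of the destination changes the path without installing any per-flow state in the core.

```python
import heapq


def shortest_path(graph, src, dst):
    """Dijkstra over graph = {node: {neighbor: cost}}; returns the node list."""
    dist = {src: 0}
    prev = {}
    heap = [(0, src)]
    while heap:
        d, node = heapq.heappop(heap)
        if node == dst:
            break
        if d > dist.get(node, float("inf")):
            continue  # stale heap entry
        for nbr, cost in graph[node].items():
            nd = d + cost
            if nd < dist.get(nbr, float("inf")):
                dist[nbr] = nd
                prev[nbr] = node
                heapq.heappush(heap, (nd, nbr))
    path = [dst]
    while path[-1] != src:
        path.append(prev[path[-1]])  # KeyError if dst is unreachable
    return path[::-1]


def forward(graph, ingress, segments):
    """Follow a segment list: take the shortest path to each segment in turn."""
    path = [ingress]
    for seg in segments:
        path += shortest_path(graph, path[-1], seg)[1:]
    return path


# Hypothetical topology with IGP costs: 101 is the ingress, 102 the egress.
GRAPH = {
    101: {103: 10, 104: 15},
    103: {101: 10, 102: 10},
    104: {101: 15, 102: 15},
    102: {103: 10, 104: 15},
}

if __name__ == "__main__":
    print(forward(GRAPH, 101, [102]))       # plain shortest path
    print(forward(GRAPH, 101, [104, 102]))  # steered through node 104
```

The only thing that differs between the two calls is the segment list pushed at the edge; the core nodes keep the same shortest-path state in both cases.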

Tutorial

I already wrote a tutorial on this 2 years ago. I’m just going to highlight the main points.

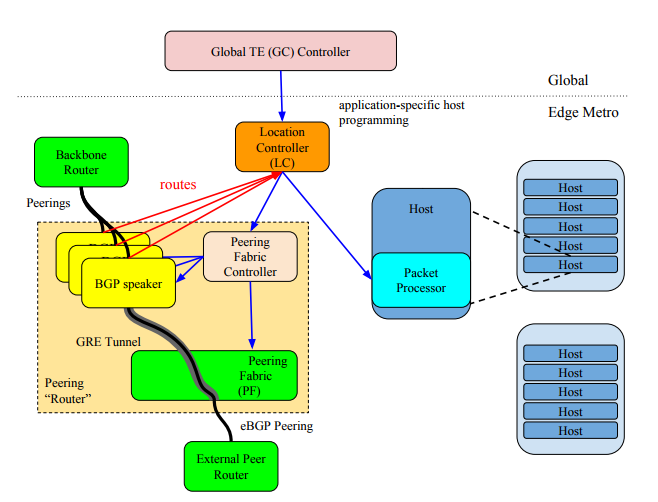

In this configuration, you have a cluster of 3 ONOS SDN controllers controlling a leaf-spine fabric. The entry nodes do a route lookup and encapsulate the packets with the MPLS label corresponding to the exit node. The packet is then forwarded along the shortest path based on the MPLS label. That’s basic IP forwarding. The cool thing here is the ability to programmatically set forwarding tunnels.

Let’s say you want all Netflix traffic to go through spine s105, making sure all Web and Voice traffic has 3 spines’ worth of bandwidth and thus lower delays. You could establish a tunnel in the following way:

A tunnel is defined as an ordered list of labels defining the path taken by a flow. The following command instantiates a tunnel called FASTPATH through the routers 101, 105, and 102, in that order.

onos> srtunnel-add FASTPATH 101,105,102

Then a policy can be applied to a subset of traffic, for example: policy1 = tcp_port=80 >> fwd(TUNNEL_1)

onos> srpolicy-add p1 1000 10.1.1.1/24 80 10.0.2.2/24 80 TCP TUNNEL_FLOW FASTPATH
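Conceptually, the policy is a match on packet headers that selects a tunnel. The Python sketch below models that idea only; it is not ONOS code. I am reading the srpolicy-add arguments as policy name, priority, source prefix and port, destination prefix and port, protocol, policy type, and tunnel name, and I am assuming that a higher priority number wins, as in OpenFlow. The `Policy` class and `select_labels` function are hypothetical names.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network


@dataclass(frozen=True)
class Policy:
    name: str
    priority: int
    src: str        # source prefix, e.g. "10.1.1.1/24"
    src_port: int
    dst: str        # destination prefix
    dst_port: int
    proto: str
    tunnel: str     # name of the tunnel to steer matching traffic into

    def matches(self, src_ip, src_port, dst_ip, dst_port, proto):
        # strict=False accepts prefixes written with host bits set.
        return (ip_address(src_ip) in ip_network(self.src, strict=False)
                and ip_address(dst_ip) in ip_network(self.dst, strict=False)
                and src_port == self.src_port
                and dst_port == self.dst_port
                and proto == self.proto)


# Mirrors the two commands above: tunnel FASTPATH and policy p1.
TUNNELS = {"FASTPATH": [101, 105, 102]}
POLICIES = [
    Policy("p1", 1000, "10.1.1.1/24", 80, "10.0.2.2/24", 80, "TCP", "FASTPATH"),
]


def select_labels(packet, default_labels):
    """Return the tunnel labels of the best matching policy, else the default."""
    # Assumption: the highest priority number is evaluated first.
    for policy in sorted(POLICIES, key=lambda p: p.priority, reverse=True):
        if policy.matches(*packet):
            return TUNNELS[policy.tunnel]
    return default_labels


if __name__ == "__main__":
    web = ("10.1.1.7", 80, "10.0.2.9", 80, "TCP")
    other = ("10.1.1.7", 443, "10.0.2.9", 443, "TCP")
    print(select_labels(web, [102]))
    print(select_labels(other, [102]))
```

Traffic that matches p1 gets the FASTPATH label list, and everything else falls back to the default shortest-path label for the exit node.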

These tunnels can be used to enforce TE policies, guarantee SLAs, and improve network utilization.

Conclusion

A Segment Routing network combined with a centralized controller for path computation can enable advanced real-time traffic engineering capabilities. In this way, Segment Routing is a perfect match for SDN.

The SDN applications have already been developed and made available in open-source projects like ONOS. The Segment Routing app mentioned here has evolved into Trellis, the networking fabric that supports the CORD project. I urge you to check their work.

Please reach out to me if you have any questions regarding how one could move forward and implement this.
